A few weeks ago, Italian journalist Francesca Barra opened her laptop to find something that would change the way she saw technology forever: AI-generated nude images of herself, posted without her consent on a pornographic forum.

She described feeling “violated and mortified”, and the most piercing question came from her young daughter:

“If it happened to me, how would I handle it?”

That’s the question every parent and teacher now needs to ask, because what happened to famous women like Francesca Barra, Giorgia Meloni, and Sophia Loren isn’t a distant headline. It’s a warning about what’s already possible for anyone with a public photo online.

What’s happening

Online forums such as Social Media Girls and Phica were found to be hosting AI-generated, sexualised “nudified” images of well-known Italian women. These weren’t real photos; they were fabricated using artificial-intelligence tools that can remove clothing or stitch a person’s face onto another body with shocking realism.

The sites are now under investigation, and Italy has passed Europe’s first law criminalising the use of AI to spread harmful content. But this problem doesn’t stop at Italy’s borders. The same technology is freely available online, and it’s being used on ordinary people, including teenagers.

How common is this?

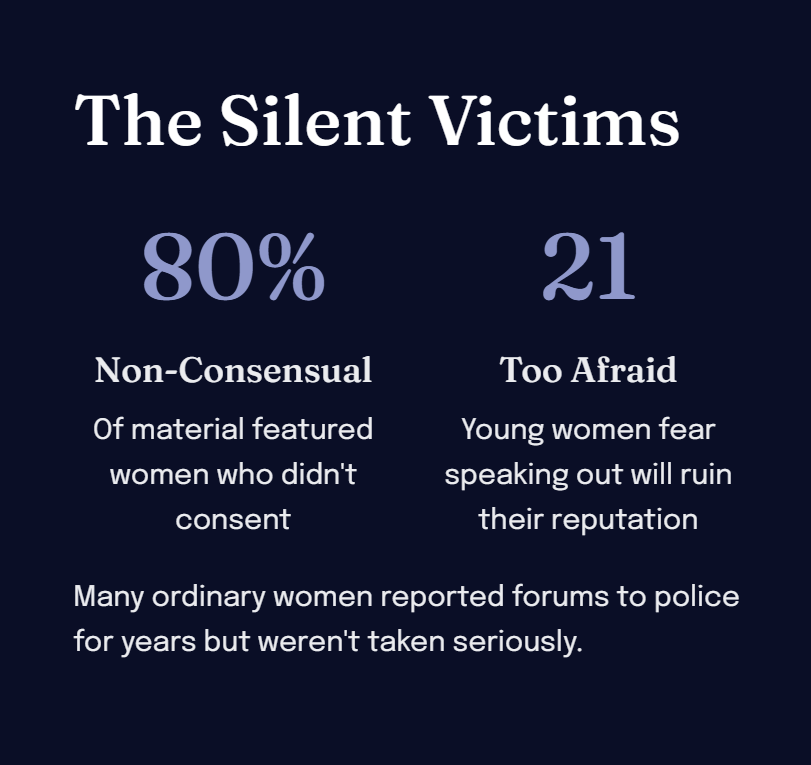

This isn’t a rare or “celebrity-only” problem. The numbers are startling:

- A multinational study across ten countries found that more than 1 in 5 adults (22.6%) have experienced some form of image-based sexual abuse.

- UK research shows that 13% of children have already encountered or been involved with a “nude deepfake”, whether by seeing one, receiving one, or being targeted by one.

- The Internet Watch Foundation (IWF) found thousands of AI-generated child sexual-abuse images posted to dark-web forums in just one month of monitoring.

- Internet Matters reported a 2,000% rise in online searches and referral links to “undress AI” sites between 2022 and 2023.

These tools are spreading faster than most parents or teachers realise, and they’re often free to use.

The apps behind it

A quick search will reveal dozens of so-called “undress AI” or “nudify” apps that promise to “reveal what’s underneath” any photo.

Many are disguised as “fun” image filters or “AI photo editors,” but they allow users to:

- Upload a photo of a clothed person

- Use AI to remove clothing or alter the image

- Download or share the fake nude

Most of these platforms are unregulated, store uploaded photos on their servers, and make it almost impossible to delete data. While many claim to ban under-18 content, enforcement is virtually nonexistent.

Key message for parents and schools: even if your child never uses such an app, their images from social media could still be misused by others.

Why this matters

Some experts describe this kind of manipulation as “virtual rape”, a form of sexual violence that strips victims of dignity and autonomy.

The psychological impact can be severe: shame, panic, and long-term fear of being disbelieved.

Younger victims often stay silent because they think:

- “No one will believe me.”

- “It’s my fault for posting photos.”

- “It will ruin my reputation.”

These are exactly the barriers that prevent victims from getting help.

What parents and teachers can do

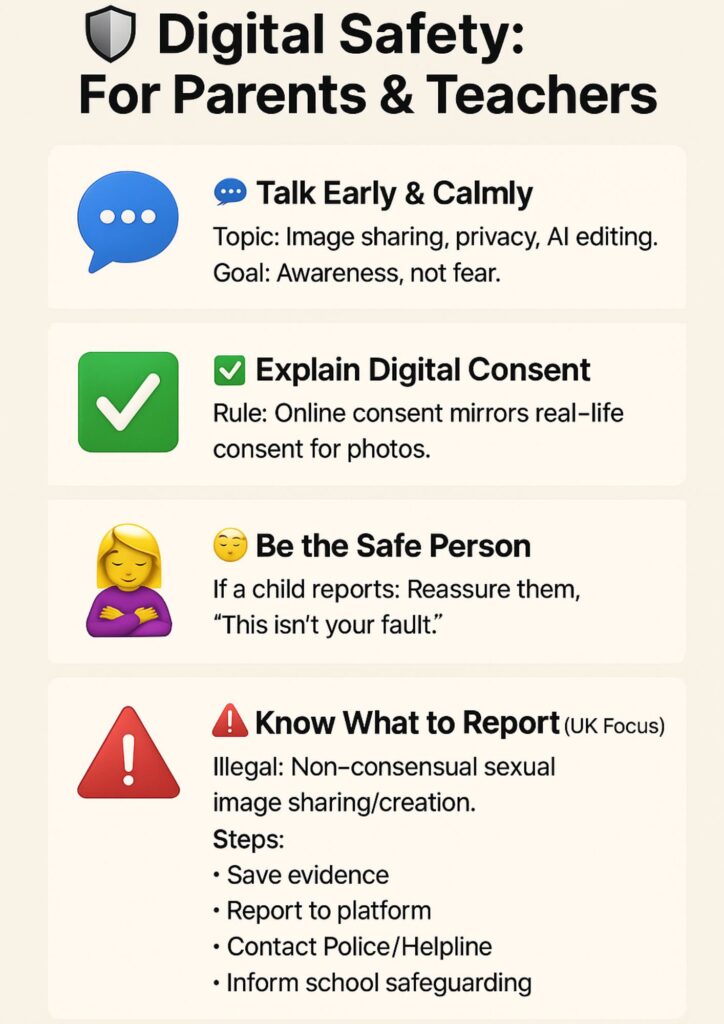

1. Talk early and calmly

Start conversations about image sharing, privacy, and AI editing.

Ask: “Have you heard about apps that can change photos? What do you think about that?”

The goal isn’t fear; it’s awareness.

2. Explain digital consent

Make sure children understand that consent applies online too:

“If someone wouldn’t want you to share their photo in real life, the same rule applies online.”

3. Be the safe person

If your child ever finds a fake or compromising image, the first thing they need is reassurance, not blame.

Say: “You’ve done the right thing telling me. This isn’t your fault.”

4. Know what to report

In the UK, sharing or creating a sexual image of someone without consent is illegal under image-based abuse laws.

Steps to take:

- Save evidence (screenshots, URLs)

- Report it to the platform immediately

- Contact the police or the Revenge Porn Helpline

- Inform your school’s safeguarding lead if a child is involved

5. Teach critical thinking

Remind young people: “Just because you see it online doesn’t mean it’s real.”

Deepfakes and nudified images can be used to manipulate, shame, or extort people; learning to recognise them early is a form of protection.

A cultural change is needed

Technology is evolving faster than legislation, but we don’t have to wait for the next law to protect our children.

We can:

- Model respectful behaviour online

- Teach empathy over mockery

- Challenge the idea that sharing fake or sexualised images is “just banter”

After all, the real fix isn’t only about blocking apps; it’s about building respect for consent in every digital space.

Final thought

AI can create art, solve problems, and connect us in wonderful ways.

But without boundaries, it can also strip people of safety and dignity.

As Francesca Barra said:

“I will not allow anyone to violate me twice.”

Let’s make sure no child or adult ever has to say those words again.

Start the conversation today.